Kubernetes, also known as K8s, was built by Google based on its experience running containers in production. Kubernetes is open source and is one of the most widely used container orchestration technologies available.

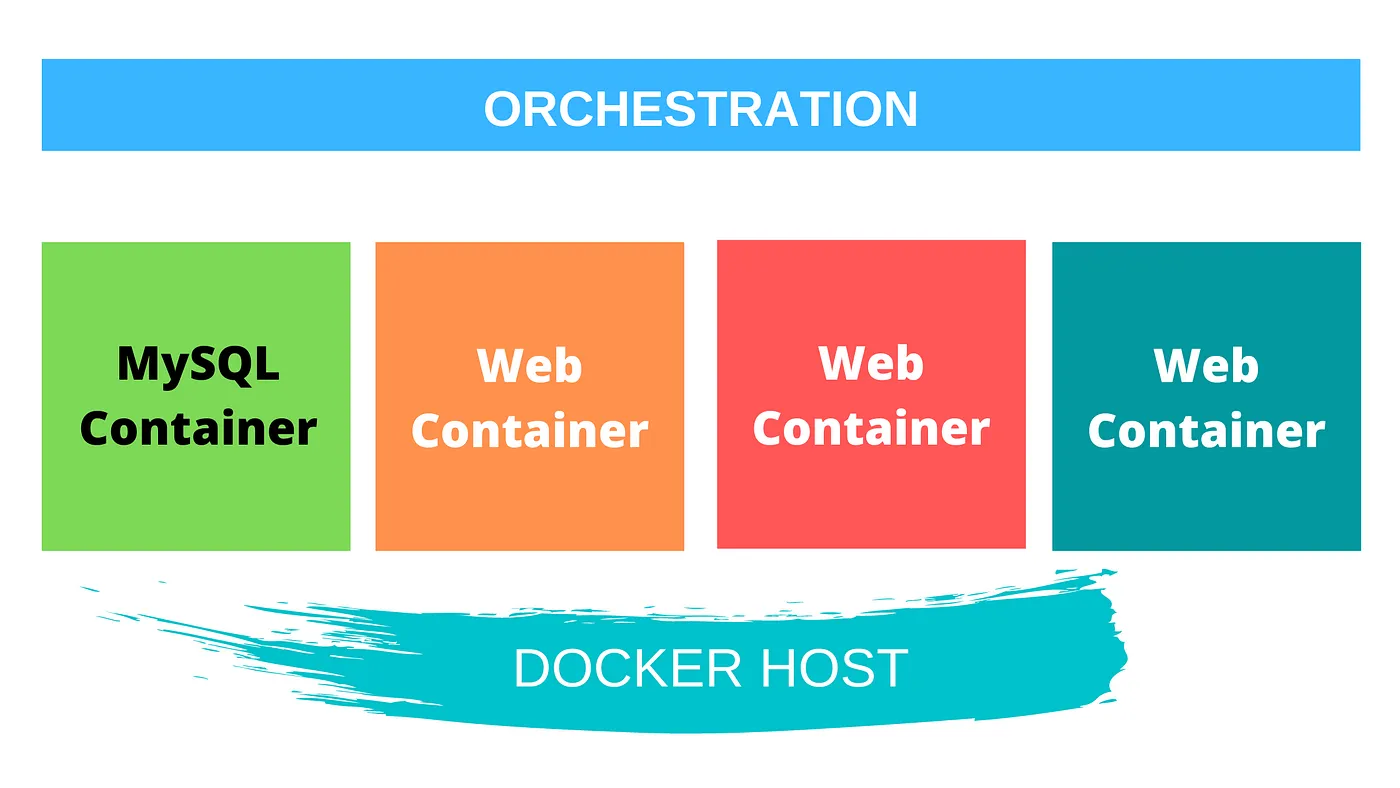

Container Orchestration

Suppose you have a web application. What happens when the number of users increases and you need to scale the application up? And how do you scale it back down automatically when the traffic decreases?

To automate scaling up and down, you need an underlying platform with a set of resources and capabilities. This platform must orchestrate the connectivity between containers and automatically scale up or down based on traffic. The scaling behavior is configured through a set of declarative object configuration files.
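To make the idea of a declarative scaling rule concrete, here is a minimal Python sketch of the proportional formula that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function name, the `min_replicas`/`max_replicas` bounds and the sample numbers are assumptions for illustration; the real autoscaler also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the observed metric
    (for example average CPU utilization, in percent) is from the target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults of 1 and 10).
    return max(min_replicas, min(max_replicas, raw))


# Traffic rises: 3 replicas at 90% CPU against a 50% target.
print(desired_replicas(3, 90, 50))  # 6, since ceil(5.4) = 6
# Traffic falls: 6 replicas at 20% CPU against a 50% target.
print(desired_replicas(6, 20, 50))  # 3, since ceil(2.4) = 3
```

The same rule handles both directions of the opening question: a metric above target scales up, a metric below target scales down.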

The whole process of automatically deploying and managing containers is known as container orchestration. It makes it possible to deploy and manage hundreds or thousands of containers in a clustered environment.

Orchestration Technologies

Kubernetes, from Google, is one of the most popular orchestration technologies today. Other options include Docker Swarm, Docker's own orchestration technology, and Mesos from Apache.

Docker Swarm is easy to use but lacks some of the advanced features needed for complex applications. Kubernetes is somewhat harder to set up than Docker Swarm, but it offers a much wider range of features. Kubernetes is supported by all the major cloud service providers, including AWS, Google Cloud Platform (GCP) and Microsoft Azure.

KUBERNETES: PROS

The application is highly available: because multiple instances of it run on different nodes, a hardware failure on one node has almost no impact on users. User traffic is load balanced across the various containers. When demand increases, more instances of the application can be deployed seamlessly, within seconds, at the service level. The number of underlying nodes can also be scaled without bringing down the application.
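The load-balancing and availability points can be illustrated with a toy model. The sketch below assumes a hypothetical list of replicas, each tagged with the node it runs on, and spreads requests over the replicas whose node is still up using simple round-robin. Kubernetes Services do not promise round-robin (the actual distribution depends on the proxy mode), so treat this only as an illustration of the idea.

```python
from itertools import cycle
from typing import Iterable, List, Tuple


def route(num_requests: int, replicas: List[Tuple[str, str]],
          failed_nodes: Iterable[str] = ()) -> List[str]:
    """Send each request to the next healthy replica in round-robin order."""
    down = set(failed_nodes)
    # Only replicas on nodes that are still up can receive traffic.
    healthy = [name for name, node in replicas if node not in down]
    if not healthy:
        raise RuntimeError("no healthy replicas left")
    targets = cycle(healthy)
    return [next(targets) for _ in range(num_requests)]


# Hypothetical replicas of one application, spread across two nodes.
replicas = [("web-1", "node-a"), ("web-2", "node-b"), ("web-3", "node-a")]
print(route(4, replicas))                           # ['web-1', 'web-2', 'web-3', 'web-1']
print(route(3, replicas, failed_nodes={"node-a"}))  # ['web-2', 'web-2', 'web-2']
```

When node-a fails, the application keeps serving requests from the replica on node-b, which is the availability benefit described above.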

KUBERNETES: Architecture

Node: A node is a machine, physical or virtual, on which Kubernetes is installed. It is a worker machine on which containers are launched.

Cluster: A cluster is a set of nodes grouped together. If one node fails, the application is still accessible from the other nodes, and having multiple nodes also helps share the load.

Master: The master is another node with Kubernetes installed, configured as a master (newer documentation calls this the control plane). It watches over the nodes in the cluster and is responsible for the actual orchestration of containers on the worker nodes.
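One way to picture the master watching over nodes is a heartbeat check: each node reports in periodically, and a node that has been silent for too long is treated as unhealthy. The sketch below is a simplified, hypothetical version of that idea; the function name and the 40-second grace period are assumed values (the real node controller's threshold is configurable, for example through kube-controller-manager's `--node-monitor-grace-period` flag).

```python
from typing import Dict


def node_status(last_heartbeat: Dict[str, float], now: float,
                grace_period: float = 40.0) -> Dict[str, str]:
    """Mark nodes Ready or NotReady based on seconds since their last heartbeat.

    grace_period is an assumed threshold chosen for this example.
    """
    return {
        node: "Ready" if now - seen <= grace_period else "NotReady"
        for node, seen in last_heartbeat.items()
    }


# node-b last reported 90 s ago, longer than the grace period.
print(node_status({"node-a": 100.0, "node-b": 30.0}, now=120.0))
# {'node-a': 'Ready', 'node-b': 'NotReady'}
```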

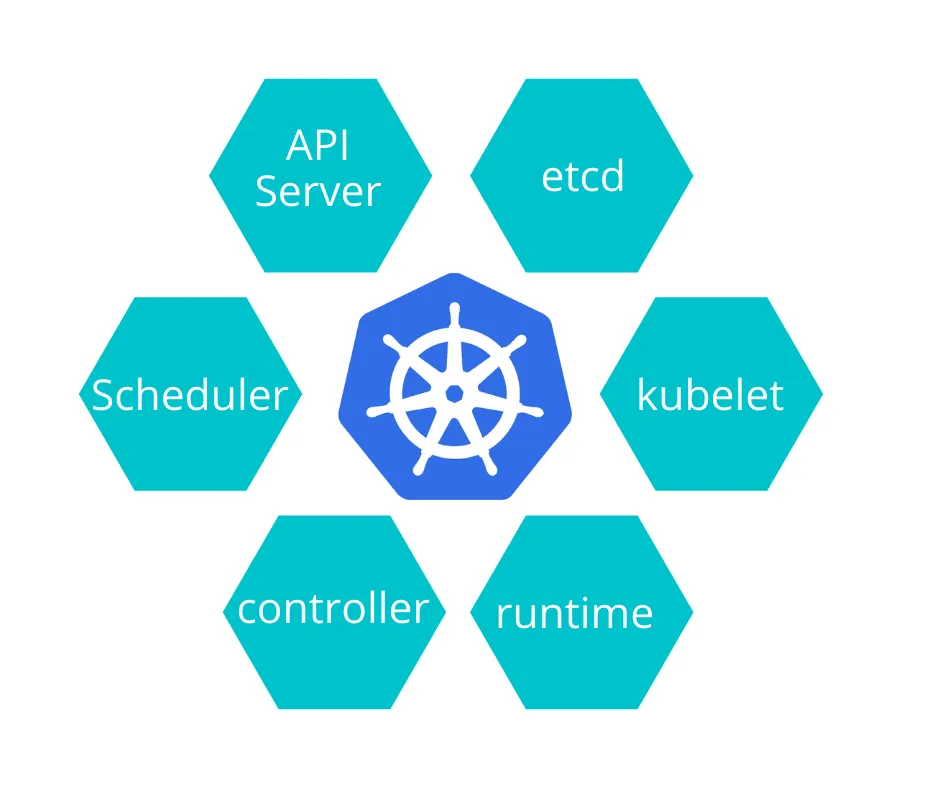

KUBERNETES: Components

API Server: The API server acts as the front end for Kubernetes. Users, management devices and command-line interfaces all talk to the API server to interact with the Kubernetes cluster.

etcd: etcd is the distributed key-value store that holds all the data used to manage the cluster. It is also responsible for implementing locks within the cluster to ensure there are no conflicts between the masters.
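The conflict-avoidance role can be sketched with a versioned key-value store that only accepts a write if the key has not changed since the writer last read it. etcd itself offers transactions that compare a key's modification revision before writing; the class below is a much simpler in-memory stand-in, and its names and the `pods/web-1` key are hypothetical.

```python
import threading
from typing import Any, Dict, Tuple


class VersionedStore:
    """Toy in-memory key-value store; every successful write gets a new revision."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[Any, int]] = {}  # key -> (value, revision)
        self._revision = 0
        self._lock = threading.Lock()  # serializes access within this process

    def get(self, key: str) -> Tuple[Any, int]:
        """Return (value, revision); a missing key reports revision 0."""
        with self._lock:
            return self._data.get(key, (None, 0))

    def compare_and_swap(self, key: str, expected_revision: int, value: Any) -> bool:
        """Write only if nobody has modified the key since expected_revision."""
        with self._lock:
            _, current = self._data.get(key, (None, 0))
            if current != expected_revision:
                return False  # conflict: someone else wrote first
            self._revision += 1
            self._data[key] = (value, self._revision)
            return True


store = VersionedStore()
store.compare_and_swap("pods/web-1", 0, "Pending")
_, rev = store.get("pods/web-1")
print(store.compare_and_swap("pods/web-1", rev, "node-a"))  # True: first writer wins
print(store.compare_and_swap("pods/web-1", rev, "node-b"))  # False: stale revision
```

Two components that read the same revision cannot both overwrite the key: the second one sees a conflict and must re-read before trying again.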

Scheduler: The scheduler is responsible for distributing work, that is, containers, across multiple nodes. It looks for newly created containers and assigns them to nodes.
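The Kubernetes scheduler works in two broad phases: it filters out nodes that cannot run a new workload and then scores the remaining ones. The following minimal sketch assumes a single resource (free CPU, in hypothetical millicore units) and a single scoring rule (prefer the node with the most CPU left over); the real scheduler uses many configurable filter and score plugins.

```python
from typing import Dict, Optional


def schedule(requested_cpu: int, free_cpu: Dict[str, int]) -> Optional[str]:
    """Pick a node for a new container, or return None if no node fits.

    requested_cpu: CPU the container asks for (hypothetical millicores).
    free_cpu: node name -> CPU still free on that node.
    """
    # Filtering: keep only nodes that can fit the request.
    feasible = [n for n in sorted(free_cpu) if free_cpu[n] >= requested_cpu]
    if not feasible:
        return None  # the container would stay pending
    # Scoring: prefer the node with the most CPU left after placement.
    # max() returns the first best node, so ties go to the alphabetically first name.
    return max(feasible, key=lambda n: free_cpu[n] - requested_cpu)


nodes = {"node-a": 400, "node-b": 1500, "node-c": 900}
print(schedule(500, nodes))   # node-b
print(schedule(2000, nodes))  # None
```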

Controller: The controllers are the brain behind the orchestration. They are responsible for noticing and responding when nodes, containers or endpoints go down, and they decide when new containers need to be brought up.
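Controllers work by reconciliation: they repeatedly compare the desired state with the actual state and act on the difference. The sketch below is one pass of a toy reconciliation loop in the spirit of a replica controller, not Kubernetes' actual implementation; the function name and the `web-N` container names are hypothetical.

```python
from itertools import count
from typing import Dict, List, Set, Tuple


def reconcile(desired: int, running: Dict[str, bool]) -> Tuple[List[str], Set[str]]:
    """One pass of a toy control loop.

    desired: number of replicas that should be running.
    running: container name -> True if healthy, False if it has failed.
    Returns (actions taken, names of healthy containers afterwards).
    """
    actions: List[str] = []
    alive: Set[str] = set()
    for name, healthy in running.items():
        if healthy:
            alive.add(name)
        else:
            actions.append(f"delete {name}")  # clean up the failed container
    # Too many replicas: remove the surplus (alphabetically last names first).
    while len(alive) > desired:
        name = max(alive)
        alive.remove(name)
        actions.append(f"delete {name}")
    # Too few replicas: start new containers under names not used before.
    for i in count(1):
        if len(alive) >= desired:
            break
        name = f"web-{i}"
        if name not in running:
            alive.add(name)
            actions.append(f"create {name}")
    return actions, alive


actions, alive = reconcile(3, {"web-1": True, "web-2": False})
print(actions)        # ['delete web-2', 'create web-3', 'create web-4']
print(sorted(alive))  # ['web-1', 'web-3', 'web-4']
```

Running the same function again on its own output produces no actions, which is the property that lets a controller loop forever without doing redundant work.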

Runtime: The container runtime is the underlying software used to run containers, for example Docker.

kubelet: The kubelet is the agent that runs on each node in the cluster. It is responsible for making sure that the containers on its node are running as expected.
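As a rough illustration of making sure containers are running as expected, the sketch below models how a node agent decides whether to restart an exited container. It follows the documented meaning of a Pod's `restartPolicy` values (`Always`, `OnFailure`, `Never`) and the documented default restart back-off, which starts at 10 seconds, doubles after each restart and is capped at five minutes. The function names are hypothetical and this is not the kubelet's actual code.

```python
def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Decide whether an exited container is restarted under a restartPolicy."""
    if restart_policy == "Always":
        return True               # restart regardless of exit code
    if restart_policy == "OnFailure":
        return exit_code != 0     # restart only after a non-zero exit
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restart policy: {restart_policy}")


def restart_delay(restart_count: int, base: int = 10, cap: int = 300) -> int:
    """Back-off in seconds before the next restart: 10, 20, 40, ... capped at 300."""
    return min(base * 2 ** restart_count, cap)


# A container that crashed (exit code 1) under OnFailure is restarted,
# waiting a little longer each time.
if should_restart("OnFailure", 1):
    print([restart_delay(n) for n in range(6)])  # [10, 20, 40, 80, 160, 300]
```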

Before wrapping up, let's look at kubectl, the Kubernetes command-line tool used to deploy and manage applications on a cluster.

- `kubectl run hello-minikube --image=<image>`: deploys an application on the cluster (kubectl needs the `--image` flag to know which container image to run)
- `kubectl cluster-info`: views information about the cluster
- `kubectl get nodes`: lists all the nodes in the cluster